AI 101: ML vs LLM vs Narrow AI vs AI vs AGI vs Conscious AI

1. The Spectrum of Artificial Intelligence 🧠

Artificial Intelligence (AI) is the broad scientific field dedicated to creating systems capable of tasks that typically require human intellect. This "intelligence" is not monolithic; it exists on a spectrum of capability and generality.

1.1. Narrow AI (ANI): The Present Reality

All AI systems currently in existence are Narrow AI (or Weak AI). They are specialists, designed and optimized for a single job or a very limited set of tasks. While they can achieve superhuman performance in their specific domain, they cannot generalize that intelligence to other problems.

Key Characteristics: Task-specific, lacks general adaptability, operates based on its training data and algorithms.

Common Examples:

Voice Assistants like Siri and Alexa that respond to voice commands.

Recommendation Engines on Netflix or Amazon that predict what you'll like.

Autonomous Vehicles, which are highly specialized for the single, complex task of driving.

Large Language Models (LLMs), like the technology behind ChatGPT, are a highly advanced form of Narrow AI, expertly trained on the specific domain of human language.

1.2. Artificial General Intelligence (AGI): The Research Horizon

Artificial General Intelligence (AGI), or Strong AI, describes a theoretical future AI with the flexible, adaptive intelligence of a human. An AGI could understand, learn, and apply knowledge across a vast range of different domains, not just the ones it was specifically trained for.

Key Characteristics:

Generalized Learning: Can apply skills from one field to another.

Common Sense Reasoning: Understands the world in a holistic, intuitive way.

Adaptability: Can learn from experience and handle unpredictable situations.

Current Status (Late 2025): No true AGI exists. We are in an era of "proto-AGI" systems—frontier models that exhibit sparks of general reasoning but still lack genuine understanding and autonomous, continuous learning. A major debate in the field is whether simply scaling up current models will lead to AGI or if entirely new scientific breakthroughs are needed.

1.3. Self-Conscious AI: The Realm of Speculation

Beyond AGI is the deeply philosophical concept of an AI with sentience, consciousness, and self-awareness. Such a being would have subjective experiences, feelings, and an awareness of its own existence.

Current Status: This is pure science fiction for now. There is no known scientific path from today's AI to consciousness, and the idea raises profound ethical questions about personhood and morality that remain theoretical.

2. Foundational Pillars of AGI Development

Achieving AGI is not merely an engineering challenge; it's a deep scientific endeavor requiring breakthroughs in our understanding of intelligence itself.

2.1. Cognitive Architectures

This is the "blueprint of a mind," defining how an intelligent system is structured.

Symbolic AI (Legacy Approach): Early AI research (e.g., Cyc, Soar) focused on explicit rules and logical reasoning. While often brittle, the concepts of structured knowledge remain relevant.

Connectionist AI (Modern Approach): This is the foundation of deep learning, using neural networks to learn patterns from data. LLMs are the current pinnacle of this approach.

Hybrid Architectures: Many researchers believe AGI will require a hybrid that combines the pattern-matching strengths of neural networks with the robust reasoning of symbolic systems.

2.2. Essential Capabilities Beyond Current AI

To bridge the gap from ANI to AGI, future systems must master several key abilities:

Continuous and Lifelong Learning: The ability to learn incrementally from new experiences without needing a full retraining and without "catastrophic forgetting" of previous knowledge.

Transfer Learning & Analogy: The capacity to take knowledge learned in one domain and apply it to a completely different, novel problem.

Common Sense Reasoning: The vast, implicit understanding of how the world works that humans use to navigate everyday life.

Embodiment and Grounding: The theory that true understanding requires intelligence to be "grounded" through interaction with the physical world via sensors and actions, not just by processing abstract data.
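The "catastrophic forgetting" mentioned under Continuous and Lifelong Learning can be seen even in a tiny model. The sketch below is only an illustration: the one-parameter model, the two tasks, and the learning rate are all invented for this example. It trains on task A, then on task B, and measures how much the error on task A grows.

```python
def train(w, data, lr=0.05, epochs=200):
    """Plain SGD on squared error for the one-parameter model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            grad = 2 * (w * x - y) * x  # derivative of (w*x - y)^2 with respect to w
            w -= lr * grad
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


# Two hypothetical tasks that demand conflicting values of the same weight.
task_a = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0)]   # task A: y = 2x
task_b = [(x, -3.0 * x) for x in (1.0, 2.0, 3.0)]  # task B: y = -3x

w = train(0.0, task_a)
error_a_before = mse(w, task_a)  # close to zero: task A has been learned

w = train(w, task_b)             # sequential training on B, with no replay of A
error_a_after = mse(w, task_a)   # large: the weight that solved A was overwritten

print(f"task A error before: {error_a_before:.6f}, after: {error_a_after:.2f}")
```

Real networks have many more parameters, but the mechanism is the same: later updates overwrite the weights that encoded the earlier skill unless something is done to protect them.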

3. The Engine of Modern AI: Machine Learning & LLMs

Machine Learning (ML) is the engine driving modern AI. Instead of being programmed with explicit rules, ML systems learn patterns directly from data. Deep Learning, a subfield of ML that uses complex, multi-layered neural networks, is the foundational technology behind today's most advanced AI, including LLMs.
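To make "learning patterns from data" concrete, here is a minimal sketch using ordinary least squares. The data points are hypothetical and were generated from y = 3x + 1; the program is never told that rule and recovers it from the examples alone.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data; the rule behind it is not given to the program.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 4.0, 7.0, 10.0, 13.0]

slope, intercept = fit_line(xs, ys)
prediction = slope * 10.0 + intercept  # apply the learned pattern to an unseen input
print(slope, intercept, prediction)
```

Deep learning replaces the straight line with a neural network of millions or billions of parameters, but the principle of fitting parameters to examples is the same.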

3.1. The Essence of LLMs: Sophisticated Prediction

At their core, LLMs are advanced prediction engines. Given a prompt, an LLM uses the statistical patterns of language it learned from trillions of words to estimate a probability for every possible next token (a word or word fragment), then picks or samples one. It repeats this process token by token to generate coherent and contextually relevant text.
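The prediction loop described above can be sketched with a toy bigram model. This is a deliberately simple stand-in, not how an LLM is implemented: it counts which word follows which in a made-up corpus, whereas a real LLM uses a neural network over subword tokens. The function names and the corpus are invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, prev):
    """Turn follower counts into a probability distribution."""
    total = sum(counts[prev].values())
    return {tok: c / total for tok, c in counts[prev].items()}


def generate(counts, start, max_new_tokens=5):
    """Greedy decoding: repeatedly append the most probable next token."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # no continuation was seen during training
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)

print(next_token_probs(model, "the"))  # "cat" is the most likely follower of "the"
print(generate(model, "on", max_new_tokens=2))
```

Greedy decoding always takes the top candidate. Production systems usually sample from the distribution instead, which is why the same prompt can yield different answers.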

3.2. The Two-Phase Training Process

LLMs undergo a massive, two-stage training process:

Pre-training (Self-Supervised): The model is fed a vast dataset, much of it from the internet, and learns grammar, facts, and reasoning patterns by predicting the next token in the text, with no human-written labels required. This builds its foundational knowledge.

Alignment (Fine-Tuning with Human Feedback): After pre-training, the model is fine-tuned on human-written examples and human preference judgments. Techniques like Reinforcement Learning from Human Feedback (RLHF) guide the model to be more helpful, truthful, and harmless, effectively "aligning" it with human values.
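The two phases optimize different objectives, and a small sketch may make the contrast clearer. The first function is the standard next-token cross-entropy used in pre-training. The second is a pairwise preference loss of the kind commonly used to train reward models for RLHF. It is shown as one common formulation, not as the method of any particular lab, and the probabilities and reward scores are made up.

```python
import math


def next_token_loss(predicted_probs, target_token):
    """Pre-training objective at one position: -log p(correct next token)."""
    return -math.log(predicted_probs[target_token])


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise preference loss: -log sigmoid(reward_chosen - reward_rejected).

    It is small when the reward model scores the human-preferred answer higher.
    """
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))


# Hypothetical model output for the prompt "the cat sat on the ..."
probs = {"mat": 0.5, "dog": 0.25, "moon": 0.25}
loss_good = next_token_loss(probs, "mat")   # the model favoured the right token
loss_bad = next_token_loss(probs, "moon")   # the model gave the right token less weight

# Hypothetical reward scores for two candidate answers to the same prompt
agrees_with_human = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
disagrees_with_human = preference_loss(reward_chosen=0.0, reward_rejected=2.0)

print(loss_good, loss_bad, agrees_with_human, disagrees_with_human)
```

In both cases training adjusts the model's parameters to push the loss down; only the source of the training signal differs (raw text versus human judgments).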

3.3. Key Architectural Pillars

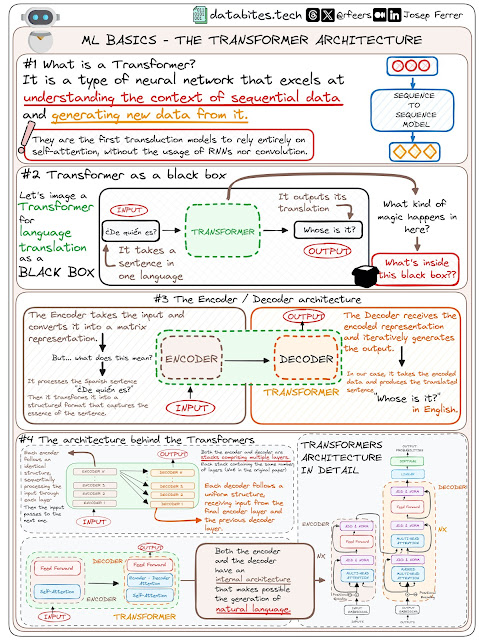

Modern LLMs are built on the Transformer architecture, which is exceptionally good at processing language thanks to a key innovation:

Attention Mechanism: This allows the model to weigh the importance of different words in the input when generating a response. It can "pay attention" to the most relevant parts of the context, however far back they appeared within the model's context window, which is crucial for maintaining long, coherent conversations.
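The weighting described above can be sketched as scaled dot-product attention, the core operation of the Transformer. This version uses plain Python lists and hand-picked vectors so it runs without any libraries. Real models apply learned projections to produce the queries, keys, and values, and run many attention heads in parallel.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three context tokens with made-up 2-d keys; tokens 0 and 2 resemble the query.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[1.0], [2.0], [3.0]]
queries = [[10.0, 0.0]]

outputs, weights = attention(queries, keys, values)
print(weights[0])  # nearly all weight goes to tokens 0 and 2, regardless of position
print(outputs[0])
```

Note that token 2 receives as much weight as token 0 even though it is further away: relevance, not distance, decides where the model looks.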

4. The Frontier: From Language Models to Agentic Systems

The cutting edge of AI is moving from simple chatbots to autonomous agentic systems. These systems use an LLM as a "cognitive core" or reasoning engine to plan, use tools, and accomplish complex, multi-step goals.

4.1. The Architecture of a Modern AI Agent

An AI agent is a complex system of interconnected modules:

LLM Reasoning Core: The central "brain" that plans and breaks down goals.

Retrieval-Augmented Generation (RAG): To give the agent factual, up-to-date knowledge, the system connects it to an external knowledge store, typically a vector database (e.g., Pinecone, Weaviate). Before answering a question, the agent "retrieves" relevant information and includes it in the prompt, grounding its response in source material. This can substantially reduce factual errors, though it does not eliminate them.

Memory Systems: Agents have short-term memory (the current conversation) and long-term memory (a database of key facts and user preferences) to maintain context over time.

Tool Use: This is the agent's ability to perform real-world actions by connecting to external APIs. It can browse the web, run code, send emails, or interact with other software.

AI Collaboration (Multi-Agent Systems): An advanced concept where multiple specialized AI agents collaborate. For example, a "researcher" agent could gather information, a "writer" agent could draft a report, and an "editor" agent could refine it, working together to achieve a goal.
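The modules listed above can be wired together in a few lines. The sketch below is a toy under loud assumptions: a hard-coded rule stands in for the LLM reasoning core, word counts stand in for learned embeddings, an in-memory list stands in for the vector database, and the single `add` tool and all function names are invented for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (real systems use learned embeddings)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """RAG retrieval step: return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


TOOLS = {"add": lambda a, b: a + b}  # hypothetical tool registry


def run_agent(goal, documents, memory):
    """Stand-in for the LLM core: a fixed rule chooses between a tool call and retrieval."""
    memory.append(("user", goal))  # short-term memory of the conversation
    words = goal.lower().split()
    if words[:1] == ["add"]:
        result = TOOLS["add"](float(words[1]), float(words[2]))
        answer = f"result: {result}"
    else:
        context = retrieve(goal, documents)[0]
        answer = f"based on: {context}"
    memory.append(("agent", answer))
    return answer


documents = ["paris is the capital of france", "the transformer uses attention"]
memory = []
print(run_agent("what is the capital of france", documents, memory))
print(run_agent("add 2 3", documents, memory))
```

In a real agent the LLM itself decides which tool to call and how to phrase the retrieval query. Frameworks such as LangChain and LlamaIndex provide these pieces, but their APIs are not shown here.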

4.2. Practical Development: Costs, Timelines & Tools

Hardware & Costs:

Frontier AGI Research: Requires massive GPU clusters (NVIDIA H100/B100 or successors) costing $100 million to over $1 billion.

DIY / Small-Scale Development: A high-end PC with a powerful GPU ($5,000 - $15,000) or rented cloud GPUs can be used to fine-tune existing open-source models (like Llama 3) and build powerful agents.
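A rough back-of-the-envelope calculation helps explain why a single high-end GPU can be enough for small-scale work. The sketch below estimates the memory needed just to hold a model's weights. The parameter count is an assumed round figure for an 8-billion-parameter model (a size at which open models such as Llama 3 are released). Fine-tuning needs additional memory for gradients, optimizer state, and activations, which this estimate ignores.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed to store the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


params = 8e9  # assumed round figure for an 8-billion-parameter model

fp16_gb = weight_memory_gb(params, 2)    # 16-bit weights: 2 bytes each -> 16.0 GB
int4_gb = weight_memory_gb(params, 0.5)  # 4-bit quantized: 0.5 bytes each -> 4.0 GB

print(f"fp16: {fp16_gb} GB, 4-bit: {int4_gb} GB")
```

This is one reason quantization and parameter-efficient fine-tuning methods are popular for DIY setups: they shrink the memory footprint enough to fit on consumer hardware.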

AGI Arrival Projections (Highly Speculative):

Median Expert Forecast: In a large survey of AI researchers, the aggregate forecast put a 50% chance on achieving human-level AI by the year 2047. This is a summary statistic across respondents, not a consensus view.

The Modern Software Stack:

Language: Python is the industry standard.

Frameworks: PyTorch is dominant for research, with JAX gaining popularity.

Libraries: The Hugging Face ecosystem (the model hub and libraries such as Transformers) is essential for accessing pre-trained models. LangChain and LlamaIndex are widely used for building agentic applications and implementing RAG workflows.

5. Societal Impact & Ethical Imperatives ⚖️

The power of AI comes with immense responsibility. Addressing its ethical and societal impact is a central challenge for the field.

5.1. AI as a Tool for Human Augmentation

AI is a transformative tool for amplifying human creativity and productivity. It can help us brainstorm ideas, automate tedious work, and translate concepts into concrete drafts, freeing us up for higher-level strategic thinking.

5.2. Critical Ethical Challenges

Bias and Fairness: AI models can learn and amplify harmful societal biases present in their training data. Mitigation requires careful data curation, algorithmic debiasing, and continuous auditing.

Misinformation: AI can generate convincing but false information ("hallucinations") and can be used to create propaganda or deepfakes at an unprecedented scale.

Job Displacement: AI is likely to automate many jobs, potentially leading to significant economic disruption and worsening inequality if society doesn't adapt with new educational and social support systems.

Misuse (Malicious or Illegal Use): Advanced AI could be used for autonomous weapons, sophisticated cyberattacks, or mass surveillance, requiring strong governance and regulation.

The Alignment Problem (Potential Existential Risk): A long-term concern for AGI is ensuring its goals remain aligned with human values. A superintelligent AI pursuing a poorly defined goal could have catastrophic, unintended consequences, even without malicious intent. Organizations like the Machine Intelligence Research Institute (MIRI) and the Future of Life Institute are dedicated to this problem.
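The "continuous auditing" mentioned under Bias and Fairness can start with something as simple as comparing outcome rates across groups. The sketch below computes a demographic parity gap, one of several common fairness metrics. The group names and predictions are hypothetical, and a real audit would use more than one metric.

```python
def positive_rate(predictions):
    """Fraction of cases that received the favourable outcome (encoded as 1)."""
    return sum(predictions) / len(predictions)


def demographic_parity_gap(predictions_by_group):
    """Largest difference in favourable-outcome rate between any two groups."""
    rates = {group: positive_rate(preds) for group, preds in predictions_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model decisions (1 = approved, 0 = rejected) for two groups
predictions = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}

gap, rates = demographic_parity_gap(predictions)
print(rates, gap)  # a gap of 0 would mean equal approval rates
```

A large gap does not prove the model is unfair on its own, but it flags where a closer look at the data and the model is warranted.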

5.3. The Path to Responsible AI

Ensuring AI is developed safely and ethically requires a collective effort:

Ethical Design: Building human values, fairness, and transparency into AI systems from the start.

Robust Governance: Creating thoughtful regulations and industry standards to guide AI's deployment.

Interdisciplinary Collaboration: Fostering teamwork between AI researchers, ethicists, social scientists, and policymakers.

Public Education: Creating an informed public that understands AI's capabilities and limitations is essential for navigating the future.